A US Defense Advanced Research Projects Agency (DARPA) investigation has put forward a new theory for enabling autonomous military systems to learn faster and more efficiently.

Autonomous systems learn by troubleshooting in risk-averse simulated environments. However, this method can take months or even years to fine-tune, which leaves autonomous systems vulnerable when they face unfamiliar situations or observations in the real world.

DARPA experts have taken a step back to consider the purpose of high- and low-fidelity simulations. They found that while high-fidelity simulations train autonomous systems through a memorisation approach to learning, low-fidelity environments train systems in a more general way, making them more adaptable to differences between environments.

“Modelling everything in high fidelity makes it so the AI [artificial intelligence] agent overfits to the dynamics of the simulation,” said Dr Alvaro Velasquez, DARPA’s programme manager for the effort. “When you go to the real world, nothing looks exactly like what you modelled/simulated. We want generalisable autonomy across a variety of platforms and domains.”

Moreover, the agency theorises that learning and transferring autonomy across diverse, low-fidelity simulations can lead to a more rapid transfer of autonomy from simulation to reality – “perhaps even as early as the same-day versus weeks or months with traditional approaches.”

Testing autonomous systems

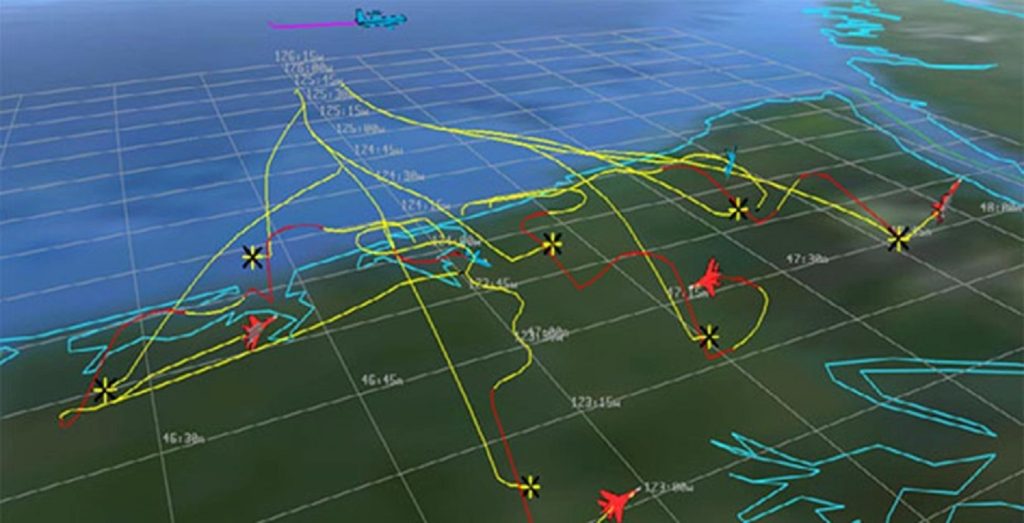

AI applications in defence cover a range of systems from unmanned autonomous vehicles to intelligence, surveillance and reconnaissance sensor systems.

GlobalData estimates the total AI market will be worth $383.3bn in 2030, implying a 21% compound annual growth rate between 2022 and 2030.
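As a rough check on what those figures imply, the short Python sketch below back-calculates the 2022 market size from the 2030 estimate and the stated growth rate. It assumes eight annual compounding periods (2022 to 2030) and treats the rounded 21% figure as exact; the function and variable names are illustrative and are not taken from GlobalData. Under those assumptions the implied 2022 base is roughly $83bn, a derived figure rather than one quoted in the source.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1.0 + cagr) ** years


def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Standard CAGR formula: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


END_2030_BN = 383.3      # $bn, GlobalData estimate quoted in the article
CAGR = 0.21              # 21%, treated as exact (assumption: the source figure is rounded)
YEARS = 2030 - 2022      # assumption: eight annual compounding periods

# Implied 2022 market size, then a round trip to confirm the rate is recovered.
start_2022_bn = implied_start_value(END_2030_BN, CAGR, YEARS)
recovered_cagr = compound_annual_growth_rate(start_2022_bn, END_2030_BN, YEARS)

print(f"Implied 2022 market size: ${start_2022_bn:.1f}bn")
print(f"Recovered CAGR: {recovered_cagr:.1%}")
```

Because the published rate is rounded, the true 2022 base could differ by a few billion dollars either way.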

DARPA’s effort to determine the appropriate simulation conditions for training autonomous systems for a specific purpose may open up a new market within the machine learning sector.

DARPA’s autonomous system training programme will feature a competition at the end of each of its two phases, with the results of the first competition being used to down-select from six performers to three.

Phase 1 will last 18 months and will develop sim-to-sim autonomy transfer techniques and novel methods for automatically developing or refining low-fidelity models and simulations to be used for transfer.

Phase 2 will last 18 months and will develop sim-to-real autonomy transfer techniques and novel methods for automatically developing or refining low-fidelity models and simulations to be used for transfer. There will be two in-programme competitions corresponding to the two phases of the programme.